UVI Image Segmentation of Auroral Oval: Dual Level Set and Convolutional Neural Network Based Approach

Abstract

1. Introduction

2. Related Works

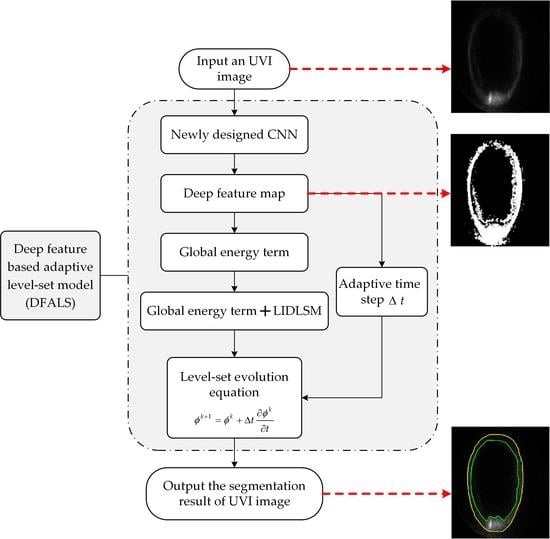

3. Deep Feature-Based Adaptive Level Set Model (DFALS)

3.1. CNN Model Design

3.2. Constructing the Global Energy Term

3.3. Constructing Adaptive Time-Step

3.4. Implementation of the DFALS

| Algorithm 1. Deep feature-based adaptive level set model (DFALS). |
| --- |
| Preprocessing: Train the CNN with the training samples, then use the trained CNN to construct the deep feature map corresponding to each UVI image. |
| Input: An original UVI image and its corresponding deep feature map. |
| Output: The segmentation result of the auroral oval image. |
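The excerpt does not reproduce the DFALS update equations (the global energy term of Section 3.2 and the adaptive time step of Section 3.3). The following is therefore only a minimal Python sketch of the general idea behind Algorithm 1: a level set function evolved by a force derived from a precomputed deep feature map. Every detail is an illustrative assumption, not the authors' method. These assumptions include the hypothetical function name `evolve_level_set` and the force F = 2p - 1 computed from a per-pixel auroral probability map p. The step dt = cfl / max|F| is a CFL-style stand-in for the paper's adaptive time step. Clipping `phi` to [-1, 1] is borrowed loosely from the selective binary regularization of SBGFRLS-type models (Zhang et al., Image Vis. Comput. 2010), not from DFALS.

```python
import numpy as np


def evolve_level_set(feature_map, phi0, n_iter=500, cfl=0.5, tol=1e-4):
    """Evolve a level set driven by a deep feature map (illustrative sketch only).

    Hypothetical assumptions, not the DFALS formulation:
    - feature_map holds per-pixel auroral-oval probabilities p in [0, 1];
    - the region force is F = 2p - 1, positive where the oval is likely;
    - phi > 0 marks the segmented region, so the front expands where F > 0.
    """
    p = np.clip(np.asarray(feature_map, dtype=float), 0.0, 1.0)
    force = 2.0 * p - 1.0
    phi = np.clip(np.asarray(phi0, dtype=float), -1.0, 1.0)

    # Stand-in for an adaptive step: dt shrinks as the largest force grows (CFL-style).
    dt = cfl / max(np.abs(force).max(), 1e-8)

    for _ in range(n_iter):
        gy, gx = np.gradient(phi)  # central differences along rows and columns
        grad_norm = np.hypot(gx, gy)
        # Explicit step of phi_t = F * |grad phi|, then clip to keep phi bounded.
        new_phi = np.clip(phi + dt * force * grad_norm, -1.0, 1.0)
        converged = np.abs(new_phi - phi).max() < tol
        phi = new_phi
        if converged:
            break
    return phi > 0
```

As a usage check, take a synthetic 64x64 feature map that is 1 inside a disk of radius 20 and 0 elsewhere, and start from a small circle at the centre. The returned mask grows to fill (approximately) the disk and never leaks outside it, because F = -1 there keeps `phi` pinned at -1.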

4. Experiment and Results

4.1. UVI Data Set

4.2. Robustness to Contour Initializations

4.3. Comparison with Other Methods

4.3.1. Subjective Evaluation

4.3.2. Objective Evaluation

- Boundary-based measurement

- Region-based measurement
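This excerpt names the two families of objective measures but not their formulas. The sketch below therefore assumes two common choices. The first is a mean symmetric boundary distance (in pixels) between the extracted contour and the expert-annotated contour. The second is a region error rate, given as the percentage of misclassified pixels. The function names are hypothetical, and the sketch is not claimed to reproduce the values reported in the result tables.

```python
import numpy as np


def boundary_pixels(mask):
    """Return (row, col) coordinates of mask pixels with a 4-neighbour outside the mask."""
    m = np.pad(np.asarray(mask, dtype=bool), 1, constant_values=False)
    core = m[1:-1, 1:-1]
    interior = core & m[:-2, 1:-1] & m[2:, 1:-1] & m[1:-1, :-2] & m[1:-1, 2:]
    return np.argwhere(core & ~interior)


def mean_boundary_distance(pred, ref):
    """Assumed boundary-based measure: mean symmetric nearest-point distance in pixels."""
    a, b = boundary_pixels(pred), boundary_pixels(ref)
    # Pairwise Euclidean distances between boundary pixels; adequate for small masks.
    d = np.sqrt(((a[:, None, :] - b[None, :, :]) ** 2).sum(axis=-1))
    return 0.5 * (d.min(axis=1).mean() + d.min(axis=0).mean())


def region_error_rate(pred, ref):
    """Assumed region-based measure: percentage of pixels labelled differently."""
    pred, ref = np.asarray(pred, dtype=bool), np.asarray(ref, dtype=bool)
    return 100.0 * np.mean(pred != ref)
```

Per-image scores computed this way would then be summarized by their mean and standard deviation over the test images, which is the form the result tables take.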

5. Conclusions

- The comparative experiment between the LIDLSM and DFALS models demonstrates that the proposed method is more robust to different contour initializations.

- The subjective evaluation experiments show that the auroral oval region extracted by DFALS agrees well with the benchmark annotated by experts.

- Compared with the RLSF, SPFLIF-IS, SIIALSM, and LIDLSM models, the DFALS model improves auroral oval segmentation accuracy in the intensity-inhomogeneous regions of the UVI images. Furthermore, the DFALS model obtains the best performance on both the boundary-based and region-based evaluation metrics.

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Boudouridis, A.; Zesta, E.; Lyons, L.R.; Anderson, P.C.; Lummerzheim, D. Enhanced solar wind geoeffectiveness after a sudden increase in dynamic pressure during southward IMF orientation. J. Geophys. Res. Space Phys. 2005, 110.

- Clausen, L.B.N.; Nickisch, H. Automatic Classification of Auroral Images From the Oslo Auroral THEMIS (OATH) Data Set Using Machine Learning. J. Geophys. Res. Space Phys. 2018, 123, 5640–5647.

- Yang, Q.; Tao, D.; Han, D.; Liang, J. Extracting Auroral Key Local Structures From All-Sky Auroral Images by Artificial Intelligence Technique. J. Geophys. Res. Space Phys. 2019, 124, 3512–3521.

- Akasofu, S.I. Dynamic morphology of auroras. Space Sci. Rev. 1965, 4, 498–540.

- Lei, Y.; Shi, J.; Zhou, Y.; Tao, M.; Wu, J. Extraction of Auroral Oval Regions Using Suppressed Fuzzy C Means Clustering. In Proceedings of the International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 6883–6886.

- Qian, W.; QingHu, M.; ZeJun, H.; ZanYang, X.; JiMin, L.; HongQiao, H. Extraction of auroral oval boundaries from UVI images: A new FLICM clustering-based method and its evaluation. Adv. Polar Sci. 2011, 22, 184–191.

- Kvammen, A.; Gustavsson, B.; Sergienko, T.; Brändström, U.; Rietveld, M.; Rexer, T.; Vierinen, J. The 3-D Distribution of Artificial Aurora Induced by HF Radio Waves in the Ionosphere. J. Geophys. Res. Space Phys. 2019, 124, 2992–3006.

- Ding, G.-X.; He, F.; Zhang, X.-X.; Chen, B. A new auroral boundary determination algorithm based on observations from TIMED/GUVI and DMSP/SSUSI. J. Geophys. Res. Space Phys. 2017, 122, 2162–2173.

- Boudouridis, A.; Zesta, E.; Lyons, R.; Anderson, P.C.; Lummerzheim, D. Effect of solar wind pressure pulses on the size and strength of the auroral oval. J. Geophys. Res. Space Phys. 2003, 108.

- Zhao, X.; Sheng, Z.; Li, J.; Yu, H.; Wei, K. Determination of the “wave turbopause” using a numerical differentiation method. J. Geophys. Res. Atmos. 2019, 124, 10592–10607.

- Shi, J.; Wu, J.; Anisetti, M.; Damiani, E.; Jeon, G. An interval type-2 fuzzy active contour model for auroral oval segmentation. Soft Comput. 2017, 21, 2325–2345.

- Kauristie, K.; Weygand, J.; Pulkkinen, T.I.; Murphree, J.S.; Newell, P.T. Size of the auroral oval: UV ovals and precipitation boundaries compared. J. Geophys. Res. 1999, 104, 2321.

- Hu, Z.-J.; Yang, Q.-J.; Liang, J.-M.; Hu, H.-Q.; Zhang, B.-C.; Yang, H.-G. Variation and modeling of ultraviolet auroral oval boundaries associated with interplanetary and geomagnetic parameters. Space Weather 2017, 15, 606–622.

- Meng, C.-I. Polar Cap Variations and the Interplanetary Magnetic Field; Springer: Dordrecht, The Netherlands, 1979; pp. 23–46.

- Liou, K.; Newell, P.T.; Sibeck, D.G.; Meng, C.-I.; Brittnacher, M.; Parks, G. Observation of IMF and seasonal effects in the location of auroral substorm onset. J. Geophys. Res. Space Phys. 2001, 106, 5799.

- Hardy, D.A.; Burke, W.J.; Gussenhoven, M.S.; Heinemann, N.; Holeman, E. DMSP/F2 electron observations of equatorward auroral boundaries and their relationship to the solar wind velocity and the north-south component of the interplanetary magnetic field. J. Geophys. Res. Space Phys. 1981, 86, 9961–9974.

- Meng, Y.; Zhou, Z.; Liu, Y.; Luo, Q.; Yang, P.; Li, M. A prior shape-based level-set method for auroral oval segmentation. Remote Sens. Lett. 2019, 10, 292–301.

- Li, X.; Ramachandran, R.; He, M.; Movva, S.; Rushing, J.A.; Graves, S.J.; Lyatsky, W.B.; Tan, A. Comparing different thresholding algorithms for segmenting auroras. In Proceedings of the International Conference on Information Technology Coding and Computing, Las Vegas, NV, USA, 5–7 April 2004; pp. 594–601.

- Cao, C.; Newman, T.S. New shape-based auroral oval segmentation driven by LLS-RHT. Pattern Recognit. 2009, 42, 607–618.

- Liu, H.; Gao, X.; Han, B.; Yang, X. An Automatic MSRM Method with a Feedback Based on Shape Information for Auroral Oval Segmentation. In Proceedings of the International Conference on Intelligent Science and Big Data Engineering, Beijing, China, 31 July–2 August 2013; pp. 748–755.

- Kass, M.; Witkin, A.; Terzopoulos, D. Snakes: Active contour models. Int. J. Comput. Vis. 1988, 1, 321–331.

- Sun, L.; Meng, X.; Xu, J.; Zhang, S. An Image Segmentation Method Based on Improved Regularized Level Set Model. Appl. Sci. 2018, 8, 2393.

- Niu, S.; Qiang, C.; Sisternes, L.D.; Ji, Z.; Rubin, D.L. Robust noise region-based active contour model via local similarity factor for image segmentation. Pattern Recognit. 2016, 61, 104–119.

- Sun, L.; Meng, X.; Xu, J.; Tian, Y. An Image Segmentation Method Using an Active Contour Model Based on Improved SPF and LIF. Appl. Sci. 2018, 8, 2576.

- Yang, X.; Gao, X.; Li, J.; Han, B. A shape-initialized and intensity-adaptive level set method for auroral oval segmentation. Inf. Sci. 2014, 277, 794–807.

- Yang, P.; Zhou, Z.; Shi, H.; Meng, Y. Auroral oval segmentation using dual level set based on local information. Remote Sens. Lett. 2017, 8, 1112–1121.

- Ham, Y.-G.; Kim, J.-H.; Luo, J.-J. Deep learning for multi-year ENSO forecasts. Nature 2019, 573, 568–572.

- Silver, D.; Schrittwieser, J.; Simonyan, K.; Antonoglou, I.; Huang, A.; Guez, A.; Hubert, T.; Baker, L.; Lai, M.; Bolton, A.; et al. Mastering the game of Go without human knowledge. Nature 2017, 550, 354.

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Zhang, K.; Song, H.; Zhang, L. Active contours driven by local image fitting energy. Pattern Recognit. 2010, 43, 1199–1206.

- Abdelsamea, M.M.; Tsaftaris, S.A. Active contour model driven by Globally Signed Region Pressure Force. In Proceedings of the 2013 18th International Conference on Digital Signal Processing (DSP), Santorini, Greece, 1–3 July 2013; pp. 1–6.

- Zhang, K.; Zhang, L.; Song, H.; Zhou, W. Active contours with selective local or global segmentation: A new formulation and level set method. Image Vis. Comput. 2010, 28, 668–676.

- Kim, J.; Nguyen, D.; Lee, S. Deep CNN-Based Blind Image Quality Predictor. IEEE Trans. Neural Netw. Learn. Syst. 2018, 1–14.

- Nair, V.; Hinton, G.E. Rectified Linear Units Improve Restricted Boltzmann Machines. In Proceedings of the International Conference on Machine Learning, Haifa, Israel, 21–24 June 2010; pp. 807–814.

- de Boer, P.-T.; Kroese, D.P.; Mannor, S.; Rubinstein, R.Y. A Tutorial on the Cross-Entropy Method. Ann. Oper. Res. 2005, 134, 19–67.

- Rumelhart, D.; Hinton, G.; Williams, R. Learning Representations by Back-Propagating Errors. Nature 1986, 323, 533–536.

- Drożdż, M.; Kryjak, T. FPGA Implementation of Multi-scale Face Detection Using HOG Features and SVM Classifier. Image Process. Commun. 2017, 21, 27–44.

- Brittnacher, M.; Spann, J.; Parks, G.; Germany, G. Auroral observations by the polar Ultraviolet Imager (UVI). Adv. Space Res. 1997, 20, 1037–1042.

- Carbary, J.F. Auroral boundary correlations between UVI and DMSP. J. Geophys. Res. 2003, 108, 1018.

| Methods | RLSF | SPFLIF-IS | SIIALSM | LIDLSM | DFALS |
| --- | --- | --- | --- | --- | --- |
| Mean value | 7.114 | 6.221 | 6.314 | 4.614 | 1.651 |
| Standard deviation | 3.456 | 3.401 | 8.354 | 8.063 | 1.289 |

| Methods | RLSF | SPFLIF-IS | SIIALSM | LIDLSM | DFALS |
| --- | --- | --- | --- | --- | --- |
| Mean value | 3.010 | 0.200 | 1.142 | 0.600 | 0.100 |
| Standard deviation | 5.153 | 1.090 | 4.006 | 5.513 | 0.482 |

| Methods | RLSF | SPFLIF-IS | SIIALSM | LIDLSM | DFALS |
| --- | --- | --- | --- | --- | --- |
| Mean value | 31.173 | 33.927 | 33.002 | 25.484 | 16.565 |
| Standard deviation | 6.609 | 6.9569 | 17.729 | 6.610 | 3.560 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Tian, C.; Du, H.; Yang, P.; Zhou, Z.; Weng, L. UVI Image Segmentation of Auroral Oval: Dual Level Set and Convolutional Neural Network Based Approach. Appl. Sci. 2020, 10, 2590. https://doi.org/10.3390/app10072590


Chicago/Turabian style: Tian, Chenjing, Huadong Du, Pinglv Yang, Zeming Zhou, and Libin Weng. 2020. "UVI Image Segmentation of Auroral Oval: Dual Level Set and Convolutional Neural Network Based Approach" Applied Sciences 10, no. 7: 2590. https://doi.org/10.3390/app10072590